Analog current meters remain relevant in a digital era, and their datasheets reward careful reading. This article walks through the specifications and performance characteristics that such a datasheet lists, then covers calibration and overload protection, so that the figures can be interpreted correctly when choosing and using an instrument.

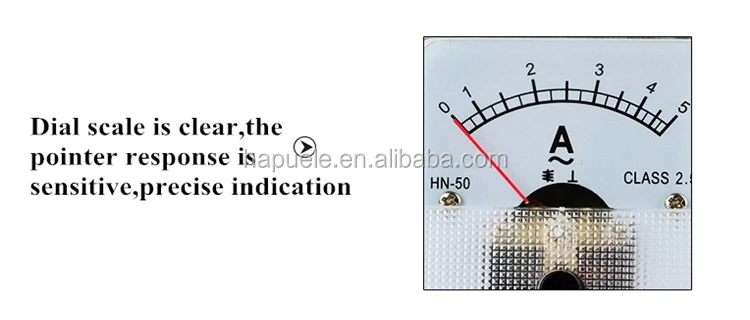

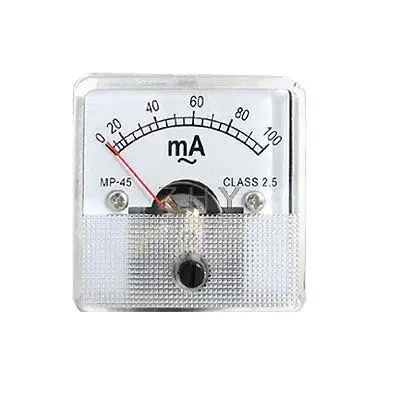

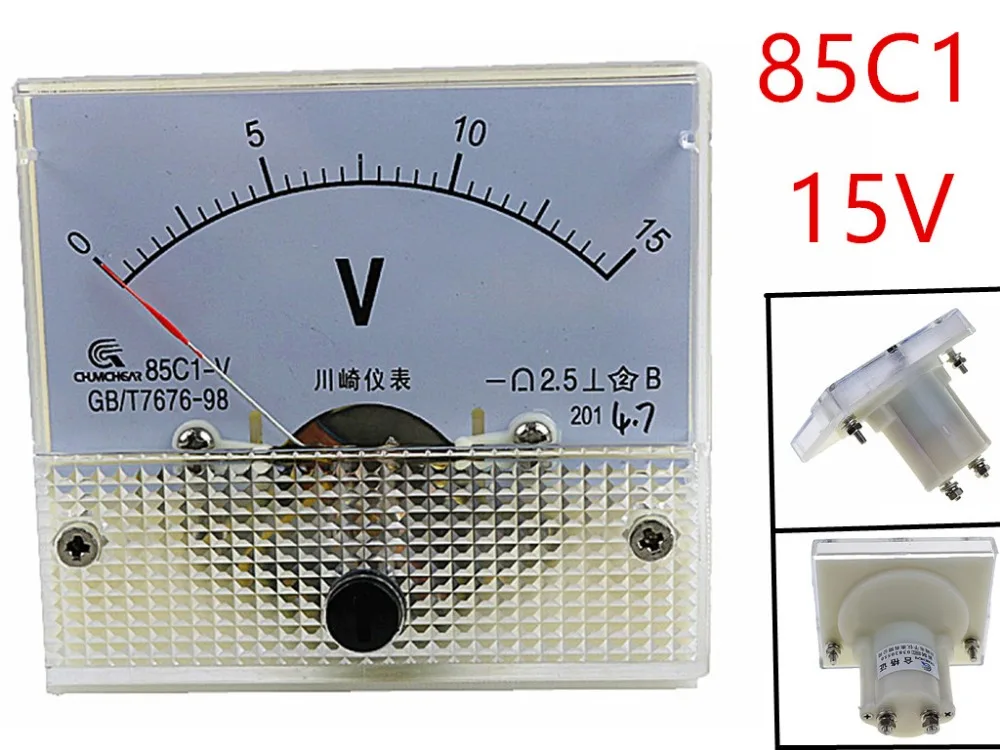

Understanding Specifications of Traditional Current Measurement Instruments

Analog instruments built to measure current are described by a small set of technical specifications. This section explains those specifications so that users can judge whether an instrument suits a given electrical measurement task.

Parameters Deciphered:

The parameters that determine how a current measurement instrument performs include accuracy, sensitivity, range, and resolution. Together they show how faithfully the device can capture and represent the current in a given circuit.

Accuracy Unveiled:

Accuracy is the closeness of the measured value to the true value of the quantity being measured. Linearity, hysteresis, and repeatability each contribute to it. Linearity describes how evenly the error is spread across the scale, hysteresis is the difference between readings taken with the current rising and with it falling, and repeatability is the agreement between successive readings of the same current. Together they indicate how reliable the instrument is under varying conditions.
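As a rough illustration of what an accuracy figure means in practice, the sketch below assumes the accuracy is quoted as a percentage of full scale, a convention commonly used for analog meters. A particular datasheet may quote it differently, and the function and parameter names here are hypothetical.

```python
def full_scale_error_band(reading_a, full_scale_a, accuracy_pct_fs):
    """Return (low, high) bounds on the true current for one reading.

    Assumption: accuracy is given as a percentage of full scale, so the
    absolute error is the same at every point on the scale.
    """
    abs_error_a = full_scale_a * accuracy_pct_fs / 100.0
    return reading_a - abs_error_a, reading_a + abs_error_a


def error_as_pct_of_reading(reading_a, full_scale_a, accuracy_pct_fs):
    """Worst-case error expressed as a percentage of the reading itself."""
    if reading_a <= 0:
        raise ValueError("reading must be positive")
    return accuracy_pct_fs * full_scale_a / reading_a
```

Under that assumption, a 2 A reading on a 10 A scale with a 2% full-scale accuracy carries an uncertainty of 0.2 A, which is 10% of the reading. This is why readings near the top of the scale are generally more trustworthy than readings near the bottom.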

Sensitivity and Resolution:

Sensitivity is the smallest change in current that the instrument can detect. Resolution is the smallest increment of current that can be displayed or recorded. Together they determine whether the instrument can discern small variations in current, which matters in applications that demand high precision.
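For a pointer-and-scale instrument, resolution can be estimated from the scale markings. The sketch below assumes a uniformly divided scale and assumes the user can interpolate to some fraction of a division; both the function name and the default of half a division are illustrative choices, not values from any datasheet.

```python
def scale_resolution(full_scale_a, divisions, readable_fraction=0.5):
    """Estimate the smallest current step readable from a uniform scale.

    full_scale_a      -- current at the top of the scale, in amperes
    divisions         -- number of equal divisions marked on the scale
    readable_fraction -- assumed fraction of one division the eye can resolve
    """
    if divisions <= 0:
        raise ValueError("divisions must be positive")
    if not 0 < readable_fraction <= 1:
        raise ValueError("readable_fraction must be in (0, 1]")
    amps_per_division = full_scale_a / divisions
    return amps_per_division * readable_fraction
```

With these assumptions, a 5 A scale marked in 50 divisions resolves steps of about 0.05 A.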

Range Exploration:

The range is the span of current values the instrument can measure. It determines which circuits the instrument can be used on, so users should check that the specified range covers the current levels expected in their application.
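Checking a range against an expected current can be expressed as a small selection routine. This is a minimal sketch: the list of available ranges and the 25% headroom factor are assumptions chosen for illustration.

```python
def pick_range(expected_a, available_ranges_a, headroom=1.25):
    """Return the smallest full-scale range that covers the expected current.

    headroom is an assumed safety margin: the chosen range must be at least
    expected_a * headroom. Returns None when no listed range is large enough.
    """
    required_a = expected_a * headroom
    for full_scale_a in sorted(available_ranges_a):
        if full_scale_a >= required_a:
            return full_scale_a
    return None
```

Choosing the smallest range that still leaves headroom keeps the pointer in the upper part of the scale, where the reading is easiest to resolve, without risking an overload.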

Environmental Considerations:

Beyond the core specifications, operating temperature, humidity, and other environmental conditions affect the performance and longevity of current measurement instruments. Checking the rated conditions helps users deploy the instrument where it will perform as specified and reduces the risk posed by environmental variation.

Conclusion:

In short, understanding an instrument’s accuracy, sensitivity, range, and environmental ratings lets users choose one that fits the application and rely on its readings.

Deciphering Technical Parameters

Technical specifications describe what an instrument can and cannot do. This section summarizes the key parameters and performance characteristics that define its operating capabilities.

| Parameter | Description |
|---|---|
| Accuracy | How close the instrument’s readings are to the true value. |
| Resolution | The smallest incremental change in the measured quantity that the instrument can detect and display. |
| Range | The span of values over which the instrument can operate, from the minimum to the maximum measurable quantity. |
| Response Time | The time the instrument needs to register a change in the measured parameter and reflect it in the displayed output. |
| Overload Capacity | The maximum input signal or current that the instrument can endure without sustaining damage. |
| Temperature Coefficient | How much the instrument’s performance changes with temperature, typically expressed as a percentage change per degree Celsius. |

With these parameters understood, users can read a datasheet with confidence and judge whether the instrument suits their intended application.
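The temperature coefficient in the table above lends itself to a short worked calculation. The sketch below assumes the coefficient applies linearly around a reference temperature and that the drift simply adds to the base accuracy as a worst case. The 23 °C reference and the function names are assumptions, since a real datasheet states its own reference conditions.

```python
def temperature_drift_pct(temp_coeff_pct_per_c, ambient_c, reference_c=23.0):
    """Additional error, in percent, caused by operating away from reference_c."""
    return temp_coeff_pct_per_c * abs(ambient_c - reference_c)


def worst_case_error_pct(base_accuracy_pct, temp_coeff_pct_per_c,
                         ambient_c, reference_c=23.0):
    """Base accuracy plus temperature drift, summed as a simple worst case."""
    drift = temperature_drift_pct(temp_coeff_pct_per_c, ambient_c, reference_c)
    return base_accuracy_pct + drift
```

For example, a coefficient of 0.05% per degree Celsius adds 1% of error when the instrument runs 20 degrees away from its reference temperature, which can be as large as the base accuracy itself.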

Optimizing Performance: Calibration Techniques

Calibration refines the instrument’s readings so that measurements are reliable and consistent. This section covers the main adjustment techniques.

Calibration Methodologies

Calibration aligns the instrument’s output with known standards, reducing inaccuracies that stem from environmental factors or inherent device variations. The method is chosen to suit the instrument and the environment in which it operates.

Data Analysis and Adjustment

Calibration depends on analyzing the collected data. Statistical analysis and trend identification reveal small deviations, which can then be corrected so that the instrument operates within its specified tolerances. Repeating the process over time keeps performance from drifting.
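One common form of statistical analysis for this purpose is an ordinary least-squares line fitted between the instrument’s readings and the values of a reference standard. The sketch below is one possible approach, not a prescribed procedure, and the function name and sample data layout are hypothetical.

```python
def fit_linear_correction(readings, references):
    """Fit reference = gain * reading + offset by ordinary least squares.

    readings   -- values indicated by the instrument under test
    references -- corresponding values from a trusted standard
    Returns (gain, offset).
    """
    n = len(readings)
    if n < 2 or n != len(references):
        raise ValueError("need two or more paired points")
    mean_x = sum(readings) / n
    mean_y = sum(references) / n
    sxx = sum((x - mean_x) ** 2 for x in readings)
    if sxx == 0:
        raise ValueError("readings must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(readings, references))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset
```

A gain far from 1 points to a scaling error, while an offset far from 0 points to a zero error. Comparing fits from successive calibration runs is one way to spot a trend.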

| Technique | Description |
|---|---|
| Zero Offset Adjustment | Corrects inherent bias in measurement, ensuring accurate readings at zero input. |
| Scaling Factor Optimization | Adjusts the instrument’s sensitivity to align with expected measurement ranges, enhancing precision across varying inputs. |
| Temperature Compensation | Accounts for thermal effects on instrument components, maintaining accuracy across temperature fluctuations. |
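The three techniques in the table can be combined into a single numeric correction applied to recorded readings. The sketch below assumes a linear instrument and a linear temperature effect. The `Calibration` class, its field names, and the 23 °C reference are hypothetical, and on a physical analog meter the same corrections may instead be made by mechanical or electrical adjustment.

```python
from dataclasses import dataclass


@dataclass
class Calibration:
    zero_offset_a: float = 0.0     # reading shown with zero input current
    scale_factor: float = 1.0      # gain correction applied after the offset
    temp_coeff_per_c: float = 0.0  # assumed fractional drift per degree C
    reference_c: float = 23.0      # assumed calibration temperature

    def correct(self, raw_a, ambient_c=None):
        """Apply zero offset, scaling, and optional temperature compensation."""
        value = (raw_a - self.zero_offset_a) * self.scale_factor
        if ambient_c is not None:
            drift = 1.0 + self.temp_coeff_per_c * (ambient_c - self.reference_c)
            value /= drift
        return value


def two_point_calibration(raw_zero_a, raw_ref_a, true_ref_a):
    """Derive offset and scale from a zero reading and one reference reading."""
    span = raw_ref_a - raw_zero_a
    if span == 0:
        raise ValueError("reference reading must differ from zero reading")
    return Calibration(zero_offset_a=raw_zero_a,
                       scale_factor=true_ref_a / span)
```

A two-point calibration is the simplest case. When more reference points are available, a fitted line gives a correction that is less sensitive to noise in any single reading.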

Fine-tuning Accuracy for Precise Measurements

Accurate readings are essential for any device that measures electrical current. Fine-tuning combines calibration with adjustments to the instrument’s sensitivity and responsiveness, so that it can discern small variations in current flow.

Once adjusted, the instrument gives readings that users can rely on, even in demanding conditions or when the signal fluctuates only slightly, to make informed decisions and protect the integrity of their electrical systems.

Enhancing Safety: Overload Protection Mechanisms

Excessive current can damage the instrument and endanger its surroundings. This section describes the mechanisms used to protect against overcurrent conditions.

Protection starts with fail-safe mechanisms that detect an overload quickly and limit its effects. Common features include:

- Current Limiting Circuitry: Caps the current so that the instrument is never subjected to levels beyond its rated capacity.

- Automatic Shutdown Protocols: Stop device operation as soon as an overload is detected, preventing damage or hazards.

- Visual Indicators: Cues such as LED indicators show the user that an overload has occurred, prompting timely intervention.

- Thermal Protection Systems: Monitor the temperature rise caused by excessive current and trigger protective measures before the instrument overheats.

Building these measures into current measurement instruments protects both the instrument and its user from the risks of overcurrent while preserving normal performance.
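The decision logic behind the protective features listed above can be sketched in a few lines. The thresholds, the `Status` names, and the function signature are all assumptions made for illustration. In a real instrument this behavior is typically implemented in hardware, with limits taken from the datasheet.

```python
from enum import Enum


class Status(Enum):
    NORMAL = "normal"      # within rated current and temperature
    WARNING = "warning"    # above rated current: light the indicator
    SHUTDOWN = "shutdown"  # beyond overload or thermal limit: disconnect


def evaluate_protection(current_a, temp_c, rated_a,
                        overload_factor=1.2, max_temp_c=70.0):
    """Classify the operating state from measured current and temperature.

    overload_factor and max_temp_c are assumed limits, not datasheet values.
    """
    magnitude_a = abs(current_a)  # treat either polarity the same way
    if magnitude_a > rated_a * overload_factor or temp_c > max_temp_c:
        return Status.SHUTDOWN
    if magnitude_a > rated_a:
        return Status.WARNING
    return Status.NORMAL
```

Checking the shutdown conditions first means that a thermal fault always takes priority, even when the current itself is within its rating.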